Open the Filebeat configuration file and add your log location, for example `/home/user/log/*.log`. In the same file, change the IP address for ELK in the Elasticsearch/Logstash and Kibana sections. Note: you can enable output to either Elasticsearch or Logstash, so uncomment only one of those sections. I would suggest trying Elasticsearch first. Note: the ELK server should be up and running and accessible from your Spring Boot server. If all is well, you should see Filebeat log that it is harvesting data from your log path.
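The configuration steps above could look roughly like the following sketch of `filebeat.yml`. The host addresses (`localhost:5601`, `localhost:9200`, `localhost:5044`) are placeholder assumptions, not values from this setup, and the `log` input type is assumed; newer Filebeat releases favor `filestream`.

```yaml
# filebeat.yml (sketch; all host addresses are placeholders)
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /home/user/log/*.log   # add your log path

# Kibana section: change the address to your ELK server
setup.kibana:
  host: "localhost:5601"

# Enable exactly one output. Elasticsearch is enabled here.
output.elasticsearch:
  hosts: ["localhost:9200"]

# Logstash alternative, left commented out.
#output.logstash:
#  hosts: ["localhost:5044"]
```

Only one output section should be active at a time; to switch to Logstash, comment out `output.elasticsearch` and uncomment `output.logstash`.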

# Spring Boot Docker syslog install

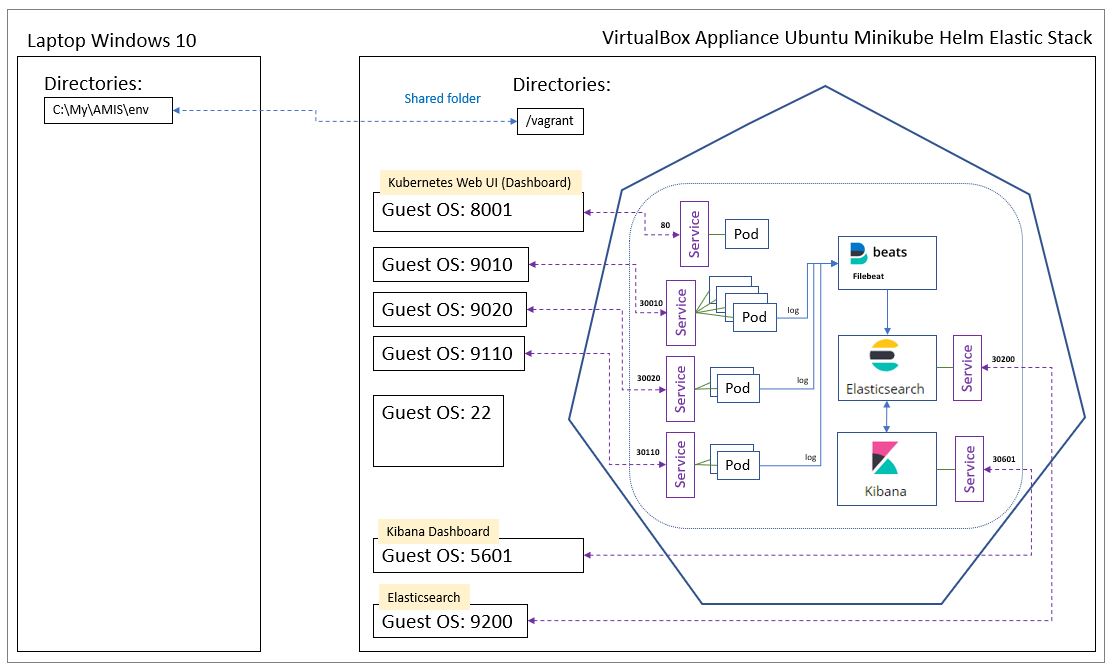

You can send data from a source to ELK by using a data collector such as Filebeat.

1. Let's assume you have a Spring Boot application running on top of an Ubuntu server.
2. Let's assume you have configured your application to store its logs in the `/home/user/log/` folder.
3. You need to install Filebeat: download it with `curl -L -O` and the URL of the Filebeat package.

You do not want to parse your log files, because nobody wants to write regular expressions for parsing and it is error prone. If you have a more dynamic container setup, you also do not want to log to files, since setting up all the bind-mounted directories or files is a pain.

To fix the first problem, add a log appender that logs JSON. I'm using Logback here, and you'll need to add the `net.logstash.logback:logstash-logback-encoder` dependency. Then you can collect the JSON log files from the Spring Boot apps under `filebeat.inputs` and send them to Elasticsearch; all the fields will be nicely extracted, and your multiline log statements (like stack traces) won't be broken up either.

For the second problem, I'd use the default JSON log that Docker provides, so your Java application can simply log to the console. Then Filebeat can automatically collect all the Docker logs through `filebeat.autodiscover` (Filebeat will need access to `/var/lib/docker/containers:/var/lib/docker/containers:ro` for this to work). To avoid the parsing issue from the first part, you'd actually need to log JSON to the console and then collect that.

If we must access log files from the host filesystem, we have to create a Docker volume. To do this, we build the image with `mvn spring-boot:build-image` and then start the container with `docker run -v /path-to-host:/workspace/logs` followed by the image name; the `-v` volume option belongs to `docker run`, not to Maven. Then we can see the `spring.log` file in the `/path-to-host` directory.

We can find in the Docker Compose documentation that containers are set up by default with the `json-file` log driver, which supports the `docker logs` command. Let's see how it works with our Docker Compose example. First, let's find our container id with `$> docker ps`, which lists `877bb028a143 karthequian/helloworld:latest "/runner.sh nginx"`. Then we can display our container logs with `$> docker logs -f 877bb028a143`, which prints lines such as `172.27.0.1 - "GET / HTTP/1.1" 200 4369`. The `-f` option behaves like the `tail -f` shell command: it echoes the log output as it's produced. We can see that, despite the `json-file` driver, the output is still plain text; JSON is only used internally by Docker. Note that if we're running our containers in Swarm mode, we should use the `docker service ps` and `docker service logs` commands instead.

After building the image successfully, you can now run the container using the following command.

Run the Docker container using `docker-compose up -d`. As you can see from the logs, we have successfully built the image and tagged it as jhooq-spring-boot-docker-compose:1. Spring Boot has a `LoggingSystem` abstraction that attempts to configure logging based on the content of the classpath. As soon as we run multiple containers at once, the logs of the different containers are mixed together and we can no longer read them easily.
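When several containers run at once, one option is to let Filebeat collect all container logs centrally through Docker autodiscover. The following is only a sketch: it assumes hints-based autodiscover, an application that logs JSON to the console, and a placeholder Elasticsearch address (`localhost:9200`). Filebeat also needs read access to `/var/lib/docker/containers`.

```yaml
# filebeat.yml (sketch; the Elasticsearch address is a placeholder)
filebeat.autodiscover:
  providers:
    - type: docker
      hints.enabled: true   # honor co.elastic.logs/* labels set on containers

processors:
  # Assumes the application writes one JSON object per log line to the console.
  - decode_json_fields:
      fields: ["message"]
      target: ""            # merge decoded keys into the event root
      overwrite_keys: true

output.elasticsearch:
  hosts: ["localhost:9200"]
```

With this approach the application itself needs no file appender or bind-mounted log directory; it only writes to standard output.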
